The “0” discovered URLs problem when loading sitemaps occurs under certain conditions and appears to be a bug in Google Search Console. I will show you how to avoid the bug and get the sitemap fetched successfully.

Details of the 0 discovered URLs problem

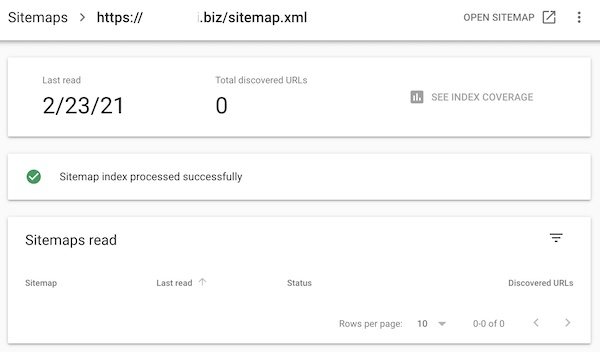

On the “Sitemaps” page of Google Search Console, “Total discovered URLs” remained 0 even after I waited for several months, as shown in the screenshot.

I ran into the “0” discovered URLs problem when I created a sitemap using Google XML Sitemaps (also known as XML Sitemap Generator for WordPress) 4.1.1, a popular WordPress plugin. The generated “sitemap.xml” is a sitemap index file, and none of its sub-sitemaps were read. Note that ownership of the website was verified with the domain verification method.
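Because a sitemap index only points to other sitemap files, a useful first step is to list the sub-sitemap URLs it contains. The sketch below assumes Python 3.9+ and the standard sitemaps.org namespace; the function name `list_sub_sitemaps` and the example index contents are hypothetical, not output captured from the plugin.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol for index files and URL sets.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def list_sub_sitemaps(index_xml: str) -> list[str]:
    """Return the <loc> URL of every <sitemap> entry in a sitemap index document."""
    root = ET.fromstring(index_xml)
    return [
        loc.text.strip()
        for loc in root.findall("sm:sitemap/sm:loc", SITEMAP_NS)
        if loc.text
    ]


# Hypothetical index using the sub-sitemap naming style described in this article.
example_index = """<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <sitemap><loc>https://abcdefgh.biz/sitemap-pt-post-2021-01.xml</loc></sitemap>
  <sitemap><loc>https://abcdefgh.biz/sitemap-pt-post-2021-02.xml</loc></sitemap>
</sitemapindex>"""

for url in list_sub_sitemaps(example_index):
    print(url)
```

Each printed URL can then be submitted or tested on its own in Search Console.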

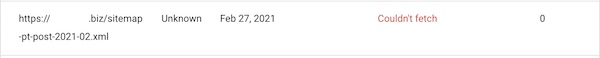

When I tried to register a sub-sitemap file such as “sitemap-pt-post-2021-02.xml” directly, Search Console returned a “Couldn’t fetch” status and did not read it.

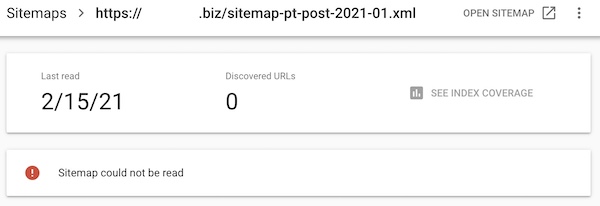

The details of the error were “Sitemap could not be read”.

So we can presume that the cause of the “0” discovered URLs problem lies in the sub-sitemaps.

Why the sub-sitemaps couldn’t be read

I used trial and error to find out why the sub-sitemap couldn’t be read, and found the following:

- When the file name of the unread sub-sitemap was changed, the sub-sitemap was read successfully.

- When I created a soft link (symbolic link) with a different file name pointing to the unread sub-sitemap, the sub-sitemap was read through that link.
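The soft-link experiment corresponds to `ln -s` on the server. Here is a minimal Python sketch of the same step, assuming the sub-sitemap exists as a static file on disk and that the web server is configured to follow symbolic links (not every configuration does); `make_sitemap_alias` and the alias name are hypothetical.

```python
import os
from pathlib import Path


def make_sitemap_alias(sitemap_path: str, alias_name: str) -> Path:
    """Create a symbolic link named alias_name next to sitemap_path (like `ln -s`).

    The original file is left untouched, so both names serve the same bytes.
    """
    original = Path(sitemap_path)
    alias = original.with_name(alias_name)
    if not (alias.exists() or alias.is_symlink()):
        # Relative target: the link keeps working if the whole directory moves.
        os.symlink(original.name, alias)
    return alias
```

For example, `make_sitemap_alias("sitemap-pt-post-2021-02.xml", "sm_pt_post_2021_02.xml")` exposes the same content under an underscore-only name without touching the original file.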

I confirmed that the HTTP headers are identical for both files, so whether a sitemap can be read depends only on its file name. The “0” discovered URLs problem therefore appears to be caused by a bug in Search Console, not by a user misconfiguration (at least in my situation).
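To repeat the header check, you can request both URLs and compare the responses. This sketch uses only the standard library; `fetch_headers`, `header_differences`, and the set of ignored headers are illustrative choices. Note that it shows what your own client receives, which is not necessarily what Googlebot receives.

```python
import urllib.request

# Headers expected to differ between any two responses; ignored in the comparison.
VOLATILE_HEADERS = frozenset({"date", "age", "expires", "set-cookie"})


def fetch_headers(url: str) -> dict[str, str]:
    """HEAD-request `url` and return its response headers with lower-case names."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return {name.lower(): value for name, value in response.headers.items()}


def header_differences(a: dict[str, str], b: dict[str, str],
                       ignore: frozenset[str] = VOLATILE_HEADERS) -> dict:
    """Return {header: (value_in_a, value_in_b)} for every non-ignored header that differs."""
    names = (set(a) | set(b)) - ignore
    return {n: (a.get(n), b.get(n)) for n in sorted(names) if a.get(n) != b.get(n)}


# Usage (needs network access; hypothetical pair of names for the same file):
# bad = fetch_headers("https://abcdefgh.biz/sitemap-pt-post-2021-02.xml")
# good = fetch_headers("https://abcdefgh.biz/sm_pt_post_2021_02.xml")
# print(header_differences(bad, good))  # {} means the headers match
```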

Next, I investigated which file names could be read and which could not.

Below, “o” marks a URL that was read successfully and “x” marks one that was not.

----

o https://abcdefgh.biz/sitemap_pt_postaaaa.xml

x https://abcdefgh.biz/sitemap-pt-postaaaa.xml

x https://abcdefgh.biz/sitemap-pt-post2021.xml

x https://abcdefgh.biz/sitemap-pt-post----.xml

x https://abcdefgh.biz/sitemap-pt-post.xml

Underscores are ok, but hyphens are not ok.

o https://abcdefgh.biz/sitemap_pt_post.xml

o https://abcdefgh.biz/sitemap_pt_post2021.xml

x https://abcdefgh.biz/sitemap_pt_post20211.xml

x https://abcdefgh.biz/sitemap0pt0post20211.xml

x https://abcdefgh.biz/sitemap_pt_post20210.xml

x https://abcdefgh.biz/sitemap_pt_post202102.xml

x https://abcdefgh.biz/sitemap_pt_post20210200.xml

Long names are bad. The longest URL that worked is 44 characters in total: 21 characters for the domain part (“https://abcdefgh.biz/”), 19 characters for the file name, and 4 characters for the extension.

x https://abcdefgh.biz/sitemap_pt_post20211.xm

x https://abcdefgh.biz/sitemap_pt_post20211

o https://abcdefgh.biz/sitemap_pt_post2021

The extension does not seem to count: the URL appears to be limited to 40 characters in total, excluding the extension.
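The length rule can be checked mechanically. This sketch assumes, as the results above suggest, that only the final extension is dropped before counting; the 40-character limit is the value observed on this one site, not a documented Search Console limit, and `length_without_extension` is a hypothetical helper.

```python
import posixpath
from urllib.parse import urlsplit

LIMIT = 40  # observed on one site with the 21-character "https://abcdefgh.biz/" prefix


def length_without_extension(url: str) -> int:
    """Length of the URL after dropping the final file extension (e.g. ".xml")."""
    _, ext = posixpath.splitext(urlsplit(url).path)
    return len(url) - len(ext)


for url in [
    "https://abcdefgh.biz/sitemap_pt_post2021.xml",
    "https://abcdefgh.biz/sitemap_pt_post20211.xml",
    "https://abcdefgh.biz/sitemap_pt_post20211.xm",
    "https://abcdefgh.biz/sitemap_pt_post2021",
]:
    n = length_without_extension(url)
    print(n, "within limit" if n <= LIMIT else "too long", url)
```

In other words, 21 characters of domain plus 19 characters of file name gives exactly 40, and one more character pushes the URL over the observed limit, with or without an extension.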

x https://abcdefgh.biz/sitemapaptapostaaaa.xml -> 19 chars

x https://abcdefgh.biz/12345678901234567890.xml -> 20

x https://abcdefgh.biz/1234567890123456789.xml -> 19

o https://abcdefgh.biz/123456789_123456789.xml -> 19

A file name of 19 characters (excluding the extension) can fail, but the same length is ok if you separate it with an underscore “_”.

x https://abcdefgh.biz/sitemapaptapostaa.xml -> 17

x https://abcdefgh.biz/sitemapaptapostaaa.xml -> 18

x https://abcdefgh.biz/sitemapapt-postaaaa.xml -> 19

o https://abcdefgh.biz/sitemapapt_postaaaa.xml -> 19

Without an underscore separator, even a 17-character file name is bad, but with one, a 19-character file name is ok. A hyphen does not work as a separator.

o https://abcdefgh.biz/sitemapaptp_ostaaaa.xml -> 19

o https://abcdefgh.biz/sitemapap_tpostaaaa.xml -> 19

x https://abcdefgh.biz/sitemapapt_postaaaaa.xml -> 20

x https://abcdefgh.biz/sitemap0pt0post2021.xml -> 19

Even when the file name is separated with an underscore, a 20-character file name is bad. A digit such as “0” does not work as a separator either.

o https://abcdefgh.biz/_sitemapaptpostaaaa.xml -> 19

x https://abcdefgh.biz/sitemapaptpostaaaa_.xml -> 19

An underscore at the very front is ok, but one at the very end is bad.

x https://abcdefgh.biz/sitemapaptpostaaa_a.xml -> 19

x https://abcdefgh.biz/sitemapaptpostaa_aa.xml -> 19

o https://abcdefgh.biz/sitemapaptposta_aaa.xml -> 19

o https://abcdefgh.biz/sitemapaptpost_aaaa.xml -> 19

It seems that if at most 15 characters of the file name precede the “_” (that is, everything before it fits within the first 36 characters of the URL), it is ok, and if 16 or more characters precede it, it is bad.

----
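The o/x results above can be condensed into a single predicate. This is a hypothetical model fitted only to those examples (all with the 21-character `https://abcdefgh.biz/` prefix): no hyphens, at most 40 characters excluding the extension, and at most 15 file-name characters before the first underscore. It reproduces every listed result, but it is a guess at the pattern, not Search Console's actual logic. For names without an underscore it applies the 15-character bound to the whole name, which the examples only test indirectly.

```python
import posixpath
from urllib.parse import urlsplit

# Thresholds observed on one site only; they are NOT documented Search Console limits.
MAX_LEN_WITHOUT_EXT = 40        # whole URL, extension excluded
MAX_POS_BEFORE_UNDERSCORE = 36  # last URL position allowed before the first "_"


def predicted_readable(url: str) -> bool:
    """Hypothetical predictor fitted to the o/x examples in this article.

    Assumes a plain URL with no query string or fragment.
    """
    name = posixpath.basename(urlsplit(url).path)
    stem, ext = posixpath.splitext(name)
    prefix_len = len(url) - len(name)  # e.g. "https://abcdefgh.biz/" -> 21

    if "-" in stem:
        return False  # every hyphenated name in the tests failed
    if prefix_len + len(stem) > MAX_LEN_WITHOUT_EXT:
        return False  # too long even with the extension ignored
    # Characters before the first underscore (the whole stem if there is none).
    leading = stem.split("_", 1)[0]
    return prefix_len + len(leading) <= MAX_POS_BEFORE_UNDERSCORE


for url in [
    "https://abcdefgh.biz/sitemap-pt-post-2021-01.xml",
    "https://abcdefgh.biz/sm_pt_post_2021_01.xml",
]:
    print(predicted_readable(url), url)
```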

By avoiding file names like the bad examples above, we can get Search Console to read the sitemaps. I could not determine the underlying cause of this bug. I hope that Search Console engineers will use this information to fix it.

Workaround

The workaround is:

- Do not put hyphens in the file name.

- Keep the URL within 40 characters, not counting the extension.

- When the file name is long, separate it with an underscore placed early in the name.

If you’re having trouble with a similar issue, try this workaround.

Making readable sitemaps using Google XML Sitemaps

If you are using Google XML Sitemaps, you can avoid the “0” discovered URLs problem by modifying the plugin. The sub-sitemap names created by Google XML Sitemaps look like this: “https://abcdefgh.biz/sitemap-pt-post-2021-01.xml”.

To apply the workaround above, shorten the file name by replacing “sitemap” with “sm”, and replace each hyphen with an underscore. For example: “https://abcdefgh.biz/sm_pt_post_2021_01.xml”.
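The renaming itself is a simple string transformation. The actual fix was made inside the plugin's PHP code, which is not reproduced here; this Python sketch only illustrates the name mapping, and `shorten_sitemap_name` is a hypothetical helper. For the change to have any effect, the sitemap index must also list the new names and the server must serve them.

```python
import posixpath


def shorten_sitemap_name(name: str) -> str:
    """Map a plugin-style sub-sitemap name to a short, underscore-only name.

    Names that do not start with "sitemap-" (such as the index "sitemap.xml")
    are returned unchanged.
    """
    stem, ext = posixpath.splitext(name)
    if not stem.startswith("sitemap-"):
        return name
    # "sitemap-" becomes "sm_", and every remaining hyphen becomes an underscore.
    return "sm_" + stem[len("sitemap-"):].replace("-", "_") + ext


old_url = "https://abcdefgh.biz/sitemap-pt-post-2021-01.xml"
base, _, name = old_url.rpartition("/")
new_url = f"{base}/{shorten_sitemap_name(name)}"
print(new_url)
```

The resulting file name has 18 characters before the extension, so the URL stays within the 40-character limit observed above.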

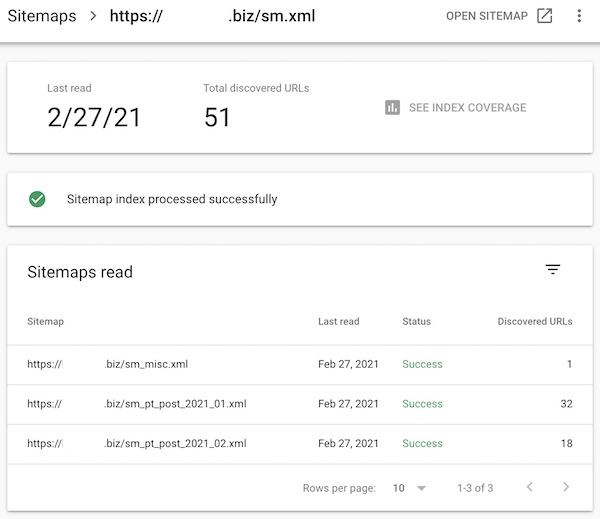

After I modified the plugin and registered the sitemap, it loaded successfully: it was read completely within a few minutes of registration.

Summary

The “0” discovered URLs problem has been raised on the Search Console community forum for many years. A common expert answer is that “it is due to the low site rank and slow crawl frequency, and the sitemap may be read after half a year”; that answer is often marked as the best answer and the thread is closed. However, at least in my environment, the problem depends on the file name and is presumably caused by a bug in Search Console. I don’t expect the bug to be fixed soon, because few people seem to have run into this exact problem, but I hope this information will someday prompt a fix.